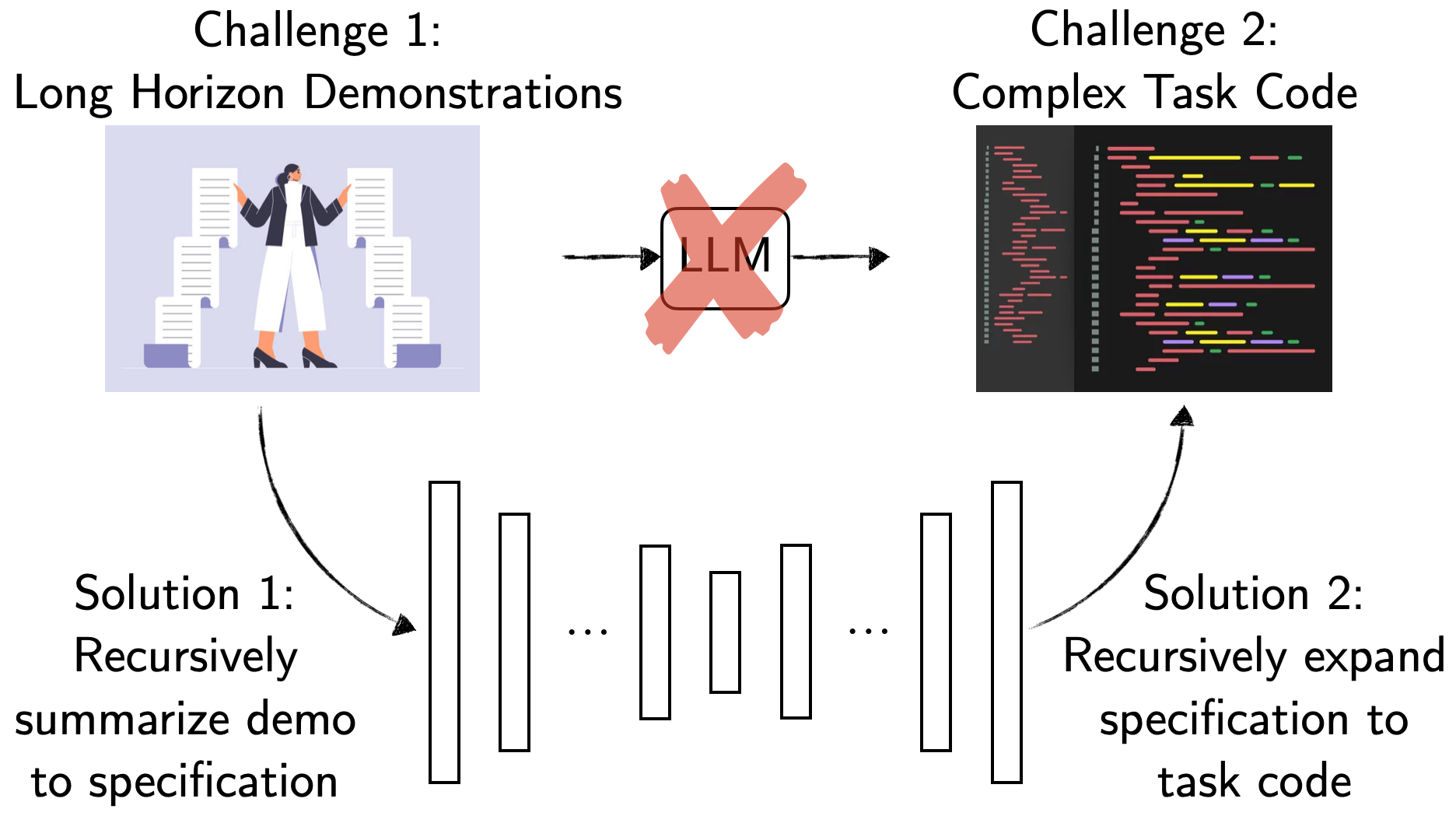

Language instructions and demonstrations are two natural ways for users to teach robots personalized tasks. Recent progress in Large Language Models (LLMs) has shown impressive performance in translating language instructions into code for robotic tasks. However, translating demonstrations into task code continues to be a challenge due to the length and complexity of both demonstrations and code, making learning a direct mapping intractable.

This paper presents Demo2Code, a novel framework that generates robot task code from demonstrations via an extended chain-of-thought and defines a common latent specification to connect the two. Our framework employs a robust two-stage process: (1) a recursive summarization technique that condenses demonstrations into concise specifications, and (2) a code synthesis approach that expands each function recursively from the generated specifications. We conduct extensive evaluation on various robot task benchmarks, including a novel game benchmark Robotouille, designed to simulate diverse cooking tasks in a kitchen environment.

Demo2Code generates robot task code from language instructions and demonstrations through a two-stage process.

In stage 1, the LLM first summarizes each demonstration individually. Once every demonstration has been sufficiently condensed, the LLM jointly summarizes them in a final step to produce the task specification.

In the example, the LLM is asked to perform intermediate reasoning (e.g., identifying the order of the high-level actions) before outputting the specification (starting at "Make a burger...").
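The stage-1 loop can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `llm` callable, the prompt wording, the `DONE` stopping signal, the `max_rounds` cap, and the `Specification:` marker are all hypothetical, and `fake_llm` is a stand-in so the sketch runs without a model.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out


def summarize_demo(llm: LLM, demo: str, max_rounds: int = 5) -> str:
    """Condense one demonstration repeatedly until the LLM replies DONE."""
    summary = demo
    for _ in range(max_rounds):
        reply = llm(f"Summarize this demonstration more abstractly, or reply DONE:\n{summary}")
        if reply.strip() == "DONE":
            break  # the LLM judges the summary sufficient
        summary = reply
    return summary


def demos_to_spec(llm: LLM, demos: List[str]) -> str:
    """Summarize each demo individually, then jointly into one task specification."""
    summaries = [summarize_demo(llm, d) for d in demos]
    reply = llm(
        "Reason about the order of the high-level actions, then write the task "
        "specification after 'Specification:'\n" + "\n---\n".join(summaries)
    )
    # Discard the intermediate reasoning and keep only the specification.
    marker = "Specification:"
    return reply.split(marker, 1)[1].strip() if marker in reply else reply.strip()


# --- Hypothetical stand-in for a real LLM so the sketch runs offline ---
def fake_llm(prompt: str) -> str:
    if prompt.startswith("Summarize"):
        body = prompt.split("\n", 1)[1]
        return "DONE" if body.startswith("summary:") else "summary: " + body.splitlines()[0]
    return "Order: cook patty, then stack.\nSpecification: Make a burger with a cooked patty."


print(demos_to_spec(fake_llm, ["pick patty\ncook patty", "pick bun\nstack bun"]))
```

Capping rounds with `max_rounds` is one simple way to guarantee termination if the model never signals that a summary is sufficient.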

In stage 2, given a task specification, the LLM first generates high-level task code that can call undefined functions. It then recursively expands each undefined function until eventually terminating with only calls to the existing APIs imported from the robot's low-level action and perception libraries.
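A minimal sketch of the stage-2 recursive expansion, assuming the generated code is Python and that a function counts as "undefined" when it is called by plain name but is neither defined in the code so far, nor a Python builtin, nor in a given set of API names. It uses the standard `ast` module to find such calls. The prompt text, `fake_llm`, `DEFS`, and the API names are hypothetical placeholders, not Demo2Code's actual prompts or robot libraries.

```python
import ast
import builtins
from typing import Callable, Dict, Set

LLM = Callable[[str], str]


def called_names(code: str) -> Set[str]:
    """Names of plain function calls in `code`, e.g. foo(x) but not obj.foo(x)."""
    return {
        node.func.id
        for node in ast.walk(ast.parse(code))
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
    }


def defined_names(code: str) -> Set[str]:
    """Names of functions defined anywhere in `code`."""
    return {n.name for n in ast.walk(ast.parse(code)) if isinstance(n, ast.FunctionDef)}


def expand(llm: LLM, code: str, apis: Set[str], max_depth: int = 10) -> str:
    """Ask the LLM to define undefined functions until only APIs/builtins remain."""
    for _ in range(max_depth):
        undefined = called_names(code) - defined_names(code) - apis - set(dir(builtins))
        if not undefined:
            return code  # terminated: every call resolves to an API or a defined helper
        for name in sorted(undefined):
            prompt = f"Define the function {name} using only helpers or these APIs: {sorted(apis)}"
            code += "\n\n" + llm(prompt)
    raise RuntimeError("expansion did not terminate within max_depth rounds")


# --- Hypothetical example: a fake LLM that returns canned definitions ---
APIS = {"move", "pick", "place", "cook", "stack"}
DEFS: Dict[str, str] = {
    "cook_obj_at_loc": (
        "def cook_obj_at_loc(obj, loc):\n"
        "    move_then_pick(obj)\n"
        "    move(loc)\n"
        "    place(obj, loc)\n"
        "    cook(obj)"
    ),
    "move_then_pick": "def move_then_pick(obj):\n    move(obj)\n    pick(obj)",
}


def fake_llm(prompt: str) -> str:
    return DEFS[prompt.split()[3]]  # the 4th word of the prompt is the function name


HIGH_LEVEL = "def make_burger():\n    cook_obj_at_loc('patty', 'stove')\n    stack('patty', 'bottom_bun')"
print(expand(fake_llm, HIGH_LEVEL, APIS))
```

Each round may introduce new undefined helpers (here, defining `cook_obj_at_loc` introduces `move_then_pick`), which is why the check repeats until the set of undefined names is empty.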

In the example, cook_obj_at_loc is initially undefined: the LLM calls it when it first generates the high-level task code and defines it only later. In contrast, move_then_pick uses only existing APIs.
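To make the two kinds of functions concrete, the sketch below shows what fully expanded task code might look like once it runs. The low-level APIs (`move`, `pick`, `place`, `cook`) are hypothetical stubs that only record actions, and the signatures of `cook_obj_at_loc` and `move_then_pick` are assumed for illustration; they are not Robotouille's real API.

```python
from typing import List

actions: List[str] = []  # records what the robot would do, in order


# --- Hypothetical low-level APIs (stand-ins for the robot's action library) ---
def move(loc: str) -> None:
    actions.append(f"move({loc})")


def pick(obj: str) -> None:
    actions.append(f"pick({obj})")


def place(obj: str, loc: str) -> None:
    actions.append(f"place({obj}, {loc})")


def cook(obj: str) -> None:
    actions.append(f"cook({obj})")


# A helper built only from existing APIs (the role move_then_pick plays above).
def move_then_pick(obj: str) -> None:
    move(obj)
    pick(obj)


# A helper the high-level code called before it existed, then expanded by the
# LLM (the role cook_obj_at_loc plays above).
def cook_obj_at_loc(obj: str, loc: str) -> None:
    move_then_pick(obj)
    move(loc)
    place(obj, loc)
    cook(obj)


# High-level task code, generated first.
def make_burger() -> None:
    cook_obj_at_loc("patty", "stove")
    move_then_pick("patty")
    move("bottom_bun")
    place("patty", "bottom_bun")


make_burger()
print(actions)
```

Running `make_burger()` yields a flat action trace in which every step is an API call, which is the termination condition stage 2 aims for.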

We can successfully complete various tasks, such as cooking, tabletop manipulation, and dish washing, while accommodating a user's preferences.

Demo2Code is compared against two other methods.

Demo2Code can generate accurate policies for language instructions with preferences implicit in demonstrations

In the first example, Demo2Code successfully extracts specificity in tabletop tasks. Although the language instruction only ambiguously says "next to", Demo2Code correctly infers from the demonstrations that the goal is "left of". The baselines fail to infer this.
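Demo2Code performs this inference with the LLM. As a hand-written analogue only (not the paper's method), the sketch below shows why demonstrations can disambiguate "next to": it computes which spatial relations hold in every demonstration and prefers the most specific one. The 2-D positions, the `tol` threshold, and the small relation set are illustrative assumptions.

```python
from typing import List, Set, Tuple

Pos = Tuple[float, float]  # (x, y) on the table; x grows to the right


def relations(obj: Pos, target: Pos, tol: float = 0.05) -> Set[str]:
    """Relations that hold between an object's final position and the target's."""
    dx, dy = obj[0] - target[0], obj[1] - target[1]
    rels: Set[str] = set()
    if abs(dy) <= tol and dx < 0:
        rels |= {"left of", "next to"}
    elif abs(dy) <= tol and dx > 0:
        rels |= {"right of", "next to"}
    return rels


def infer_relation(demos: List[Tuple[Pos, Pos]]) -> str:
    """Return the most specific relation consistent with every (non-empty) demo list."""
    common = set.intersection(*(relations(o, t) for o, t in demos))
    specific = sorted(common - {"next to"})
    if specific:
        return specific[0]  # e.g. "left of" beats the vaguer "next to"
    return "next to" if "next to" in common else "unknown"


print(infer_relation([((0.2, 0.5), (0.3, 0.5)), ((0.6, 0.1), (0.7, 0.12))]))
```

If the demonstrations placed objects on both sides of the target, only "next to" would survive the intersection, and the instruction's own wording would be the best available specification.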

User 22 prefers to first scrub the objects one at a time, then rinse them one by one.

In contrast, User 30 prefers to scrub and then rinse each object before moving on to the next.

Demo2Code can capture and translate these implicit preferences to code.
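As an illustration of what translating these preferences into code can look like, the two hypothetical policies below differ only in loop structure. `scrub` and `rinse` are stub functions that log actions, not a real dish-washing API, and the object names are made up.

```python
from typing import List


def scrub(obj: str, log: List[str]) -> None:
    log.append(f"scrub {obj}")


def rinse(obj: str, log: List[str]) -> None:
    log.append(f"rinse {obj}")


def wash_user_22(objs: List[str], log: List[str]) -> None:
    # Scrub every object first, then rinse them one by one.
    for obj in objs:
        scrub(obj, log)
    for obj in objs:
        rinse(obj, log)


def wash_user_30(objs: List[str], log: List[str]) -> None:
    # Scrub and rinse each object before moving on to the next.
    for obj in objs:
        scrub(obj, log)
        rinse(obj, log)


log_22: List[str] = []
wash_user_22(["plate", "cup"], log_22)
log_30: List[str] = []
wash_user_30(["plate", "cup"], log_30)
print(log_22)  # ['scrub plate', 'scrub cup', 'rinse plate', 'rinse cup']
print(log_30)  # ['scrub plate', 'rinse plate', 'scrub cup', 'rinse cup']
```

Both policies use the same primitives on the same objects; only the ordering, which is what the demonstrations reveal, distinguishes the two users.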

Huaxiaoyue Wang, Gonzalo Gonzalez-Pumariega, Yash Sharma, Sanjiban Choudhury

We sincerely thank Nicole Thean (@nicolethean) for creating our art assets for Robotouille!